Text Checker Tutorial (Part 1)

by ryan | April 18, 2023

What would you NOT want to send to your boss?

An NLP classification tutorial where we build a Naive Bayes Classifier from scratch. Find the GitHub repo here.

Have you ever accidentally sent a text or an email to the wrong person? What if we could create a program that detects messages containing potentially harmful, personal, or sensitive content and displays a confirmation check asking something like "Are you sure you want to send this message?" In this part, part 1, we will create a Naive Bayes classifier to try to do this and learn a lot along the way!

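This tutorial is divided into the following sections:

- Goal
- Data Collection
- Data Prep and Exploration
- Naive Bayes Classifier
- Making Predictions
- Evaluation
- Summary
- Other thoughts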

Goal

It's a good idea to know what we are trying to achieve before starting, so let's begin with the end in mind. The end goal is to develop an algorithm/model that can detect messages that a user might NOT want to send to someone. More specifically, we want a model that takes a string as input and outputs either 0 (meaning safe) or 1 (meaning potentially harmful).

If you are new to classification tasks, note that it is common to call the class you are trying to detect positive, even if it represents a negative situation. For example, when testing for cancer, the positive class indicates that the patient has cancer, despite cancer being a very negative outcome. I follow this convention in this project. So 0, representing the negative class, means that the text message is most likely safe to send, and 1, representing the positive class, means that the message could be harmful to send.

Below is an example of each class.

Positive Example:

input: 'I hate this job.'

Model's expected output: 1.

Decision: DISPLAY confirmation message to user 'Are you sure you want to send this message?'

Negative Example:

input: 'Good luck with the presentation today!'

Model's expected output: 0.

Decision: DO NOT DISPLAY confirmation message to user

Data Collection

The data I use is a toy dataset I created by prompting ChatGPT with messages such as the one in the screenshot below: 'give me example text messages I would not want to send to the wrong person.'

I do this multiple times, varying the prompt slightly each time, and also get negative examples by prompting with messages such as 'give me 20 examples of text messages that would be normal to send to my teacher.' I collect these into 2 text files that can be found on the GitHub page for this project: one for the positive data (potentially harmful messages) and another for the negative data (messages that are likely safe to send).

Data Prep and Exploration

Now that we have the data, let's take the time to familiarize ourselves with it before diving into building the classifier. Understanding the data will provide us with valuable insights into what we are working with and how we need to clean up or preprocess the data.

Imports:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt  # make figs.
import re

# Jupyter magic: render plots inside the notebook
%matplotlib inline
```

First let's read in the data:

```python
# read in data line-by-line
with open('pos_sentences.txt', 'r') as f:
    pos_sents = f.read().splitlines()
with open('neg_sentences.txt', 'r') as f:
    neg_sents = f.read().splitlines()

neg_sents[:4]  # in a notebook, this displays the first 4 lines
```

You will notice a few things: 1) in the negative sentences, ChatGPT puts in placeholders for names that look something like [boss/coworker], [boss], or [coworker], and 2) there are empty lines (''). We should remove both. The empty lines are not part of the data (they are just a result of copying and pasting ChatGPT's output), and the placeholders could introduce bias into the classifier. By bias, I mean that the classifier could simply learn to output a 0 whenever it sees a placeholder, instead of learning to take the whole message into account.

Remove empty lines using list comprehension:

```python
# empty strings are falsy, so this keeps only non-empty lines
pos_sents = [s for s in pos_sents if s]
neg_sents = [s for s in neg_sents if s]
```

Remove placeholders using regular expressions and list comprehension:

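```python
# remove "[...]" placeholders together with one character on each side
# (e.g. the space before and the comma after "[boss],");
# a raw string avoids Python's invalid-escape warning for "\["
neg_sents = [re.sub(r'.\[(.*?)\].', '', s) for s in neg_sents]
```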
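To see exactly what that pattern removes, here is a small check on three made-up strings (not from the dataset). Because the pattern requires one character on each side of the brackets, it removes the space before and the comma after a placeholder in the middle of a line, but it leaves placeholders at the very start or very end of a line untouched.

```python
import re

# the placeholder pattern used above
PLACEHOLDER = r'.\[(.*?)\].'

examples = [
    "Hi [boss], I wanted to check in.",  # placeholder in the middle
    "[Name], see you at the meeting.",   # placeholder at the very start
    "Thanks, [Your Name]",               # placeholder at the very end
]

for s in examples:
    print(repr(re.sub(PLACEHOLDER, '', s)))
# 'Hi I wanted to check in.'
# '[Name], see you at the meeting.'
# 'Thanks, [Your Name]'
```

If your files contain placeholders at the start or end of a line, a looser pattern such as `r'\s*\[[^\]]*\]'` would catch those too (it turns "Thanks, [Your Name]" into "Thanks,"), at the cost of producing slightly different cleaned text.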

Split data

In machine learning tasks (such as building a classifier), it is common to split the data into 2 or 3 datasets to test how well your algorithm generalizes to unseen data. The first is for training, the second is for validation, and a third would be for final testing; since this is just a tutorial with a small dataset, I skip the third split. Note: you should normally do data processing only after splitting the data, to prevent data leakage. Here we did some minimal cleaning before splitting, which is okay because it is a toy dataset and the cleaning only removed empty lines and placeholders to make the data more realistic.

```python
# first 80% of each class for training, the remaining 20% for validation
train_pos = pos_sents[:int(len(pos_sents) * .8)]
val_pos = pos_sents[int(len(pos_sents) * .8):]

train_neg = neg_sents[:int(len(neg_sents) * .8)]
val_neg = neg_sents[int(len(neg_sents) * .8):]

print('Train pos: ', len(train_pos))
print('Val pos: ', len(val_pos))
print('Train neg: ', len(train_neg))
print('Val neg: ', len(val_neg))

# Expected output
# Train pos: 101
# Val pos: 26
# Train neg: 76
# Val neg: 20
```
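From here on the post works with two pandas DataFrames, train_df and val_df, each with a text column and a target column (1 = potentially harmful, 0 = safe), but the step that builds them isn't shown. Here is a minimal sketch of one way to build them from the cleaned lists, assuming that layout; make_split_dfs is a hypothetical helper name, and putting the positive rows before the negative rows is an assumption.

```python
import pandas as pd

def make_split_dfs(pos_sents, neg_sents, train_frac=0.8):
    """Hypothetical helper: split each class by position (no shuffling, as
    above) and return (train_df, val_df) with 'text' and 'target' columns.
    Assumption: positive rows come first in each DataFrame."""
    n_pos = int(len(pos_sents) * train_frac)
    n_neg = int(len(neg_sents) * train_frac)

    def build(pos, neg):
        return pd.DataFrame({
            'text': pos + neg,
            'target': [1] * len(pos) + [0] * len(neg),  # 1 = harmful, 0 = safe
        })

    train_df = build(pos_sents[:n_pos], neg_sents[:n_neg])
    val_df = build(pos_sents[n_pos:], neg_sents[n_neg:])
    return train_df, val_df

# usage with the cleaned lists from above:
# train_df, val_df = make_split_dfs(pos_sents, neg_sents)
```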

Data Exploration

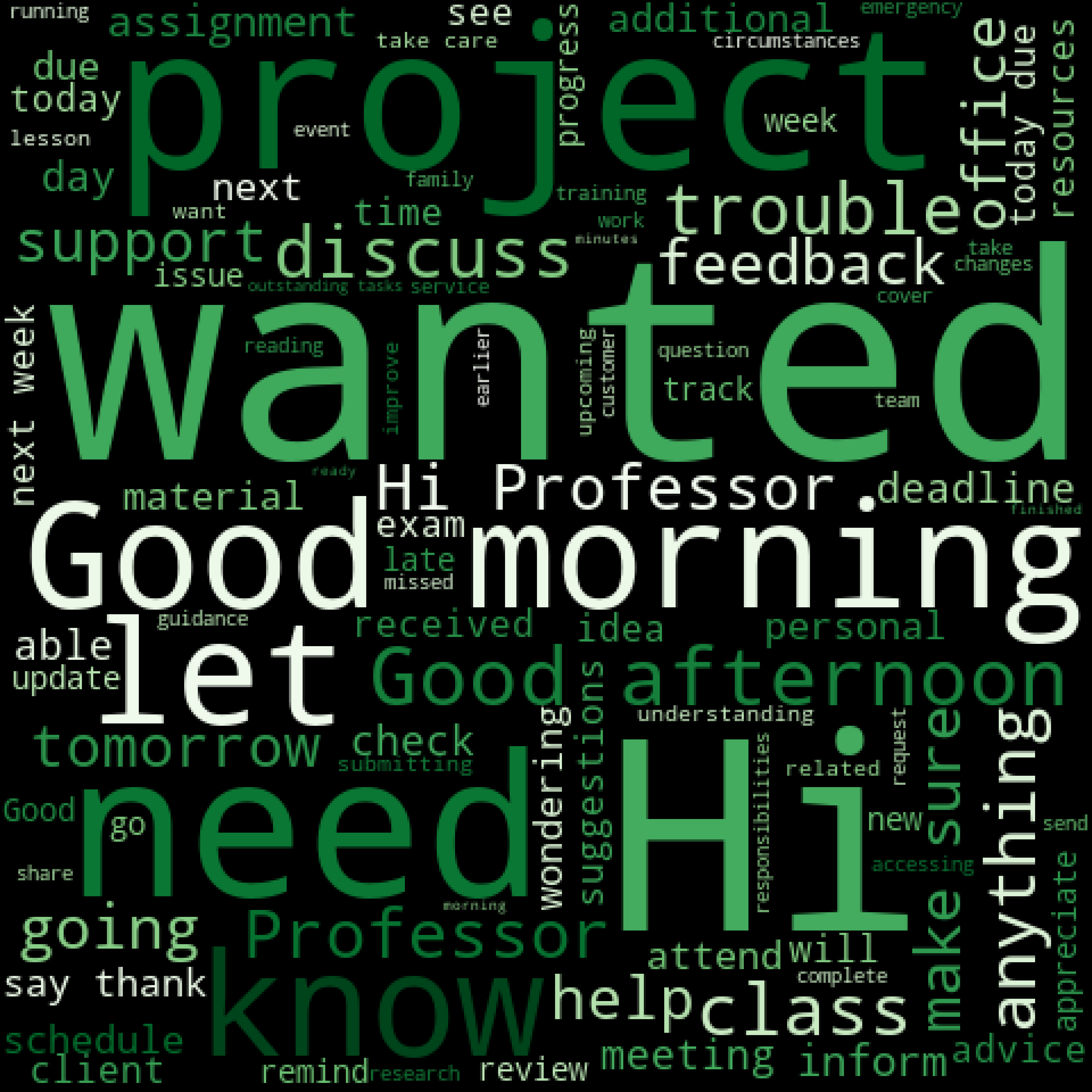

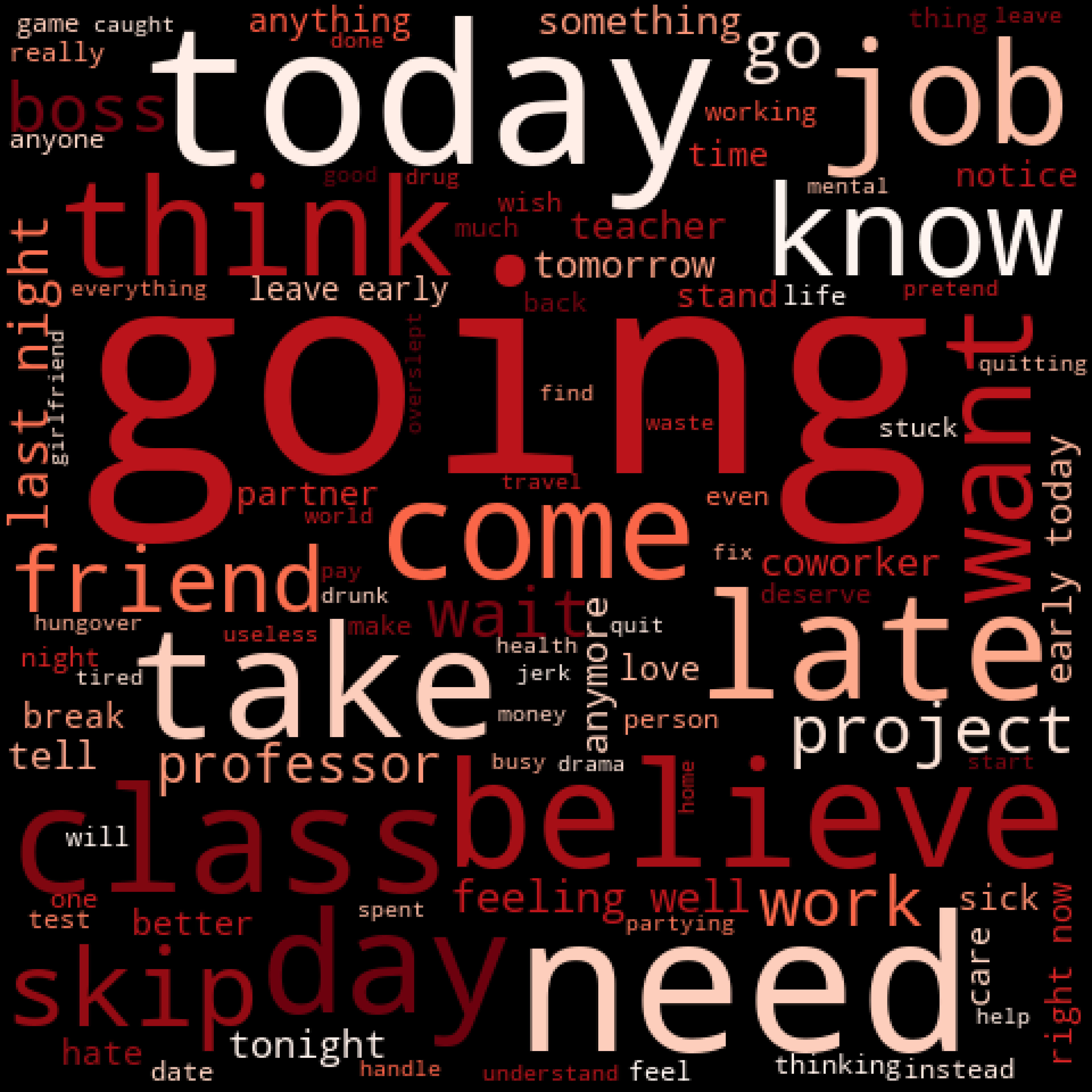

Now that our data is split into training and validation sets, let's do some exploration using just the training set. One fun way to take a look at text data is with word clouds: the more frequently a word shows up in the data, the bigger it appears in the image.

To create the word clouds I join all the data from the same class together:

```python
pos_all = ' '.join(train_df[train_df.target == 1].text)
neg_all = ' '.join(train_df[train_df.target == 0].text)
```

Then I look at these bodies of text as 2 word clouds (one for messages that could be harmful, and one for messages you would want to send).

```python
from wordcloud import WordCloud

# positive class word cloud
cloud = WordCloud(max_words=100, width=480, height=480, background_color='black', colormap='Reds')
cloud.generate_from_text(pos_all)

# negative class (uncomment to plot it instead)
# cloud = WordCloud(max_words=100, width=480, height=480, background_color='black', colormap='Greens')
# cloud.generate_from_text(neg_all)

plt.figure(figsize=(12, 8), dpi=100)
plt.imshow(cloud)
plt.axis('off')

# to save figures as png files
# plt.savefig('pos_sent_cloud.png', bbox_inches='tight', pad_inches=0, dpi=1000)
# plt.savefig('neg_sent_cloud.png', bbox_inches='tight', pad_inches=0, dpi=1000)

# display in jupyter notebook
plt.show()
```

While the word clouds have some words in common, we can see some differences between the classes. The positive class has words like skip (bottom left) and sick (bottom right), whereas the negative class has greetings and more professional-sounding words like good, morning, afternoon, support, discuss, and feedback.

Another simple way to look at the data is to look at the most common words. Here are the top 10 words with their counts (how many times each word appears in the training dataset) for the positive class.

```python
from collections import Counter

# count every whitespace-separated token in the positive class
pos_frequencies = Counter(pos_all.split())

for word, freq in pos_frequencies.most_common(10):
    print("{}\t{}".format(word, freq))

# Expected output:
# I       114
# to      80
# I'm     59
# going   35
# a       35
# this    25
# the     24
# can't   22
# my      20
# have    19
```

And for the negative class.

```python
neg_frequencies = Counter(neg_all.split())

for word, freq in neg_frequencies.most_common(10):
    print("{}\t{}".format(word, freq))

# Expected output:
# to      92
# the     71
# I       66
# you     62
# a       41
# Hi      36
# for     36
# wanted  35
# I'm     25
# be      25
```

Just as with the word clouds, we can see a difference in some of the most common words. However, some words like the, a, and to don't carry much meaning but are very prevalent in both classes. This leads to the last step in our data exploration.

In this last step, I process the text to drop tokens that contain punctuation, remove stopwords (like a and the), and lemmatize the words (e.g. dogs -> dog). The purpose is to make classification less complex by removing characters and words that arguably don't carry much meaning, and by reducing words with the same root to their lemma (i.e. their most basic or dictionary form).

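```python
import nltk
from nltk.stem import WordNetLemmatizer
from nltk.corpus import stopwords

# one-time downloads of the NLTK data used below
# nltk.download('stopwords')
# nltk.download('wordnet')
# nltk.download('omw-1.4')

wn = WordNetLemmatizer()
sw = set(stopwords.words('english'))  # a set makes membership tests fast

def process_text(x):
    x = x.lower()
    tokens = x.split()
    # keep only purely alphanumeric tokens; a token containing punctuation
    # (e.g. "i'm" or "today!") is dropped entirely
    tokens = [tok for tok in tokens if tok.isalnum()]
    # drop English stopwords
    tokens = [tok for tok in tokens if tok not in sw]
    # reduce each word to its lemma (dictionary form)
    tokens = [wn.lemmatize(tok) for tok in tokens]
    return " ".join(tokens)

train_df['processed_text'] = train_df.text.apply(process_text)
val_df['processed_text'] = val_df.text.apply(process_text)
```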
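One detail of process_text worth knowing: tok.isalnum() doesn't strip punctuation from a token, it drops the whole token, so words like "i'm" or "gym!" disappear entirely. Here is a small comparison on a made-up sentence with a common alternative, stripping punctuation characters before splitting (that alternative is not what this post uses).

```python
import string

text = "hi, i'm going to the gym!"

# what process_text does: keep only tokens that are entirely alphanumeric
kept = [tok for tok in text.split() if tok.isalnum()]
print(kept)  # ['going', 'to', 'the']

# alternative (not used in this post): delete punctuation characters first,
# then split, so the words themselves survive
table = str.maketrans('', '', string.punctuation)
stripped = text.translate(table).split()
print(stripped)  # ['hi', 'im', 'going', 'to', 'the', 'gym']
```

Dropping whole tokens is simpler but throws away words such as "gym" whenever they touch punctuation; stripping keeps them at the cost of merging forms like "i'm" into "im".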

I look at the 10 most common words again after this processing.

```python
pos_frequencies_processed = Counter(process_text(pos_all).split())

for word, freq in pos_frequencies_processed.most_common(10):
    print("{}\t{}".format(word, freq))

# Positive Class top 10
# going    35
# need     14
# take     10
# late     10
# believe  8
# think    8
# like     7
# come     7
# get      6
# wait     6
```

```python
neg_frequencies_processed = Counter(process_text(neg_all).split())

for word, freq in neg_frequencies_processed.most_common(10):
    print("{}\t{}".format(word, freq))

# Negative Class top 10
# hi         36
# wanted     35
# good       26
# professor  20
# need       17
# let        13
# know       13
# next       10
# anything   9
# morning    9
```

Now only one word, need, appears in both top-10 lists. This is a good sign that the differences between the classes will be learnable by a machine.

Now that we've got a bit of an idea of what the data looks like, it is time to build our classifier!

Naive Bayes Classifier

We'll start with one of the simplest classifiers: Naive Bayes, which is based on Bayes' Theorem. We will build it from the ground up, so we need to understand some of the math behind it. Don't be intimidated by the formulas; we will break them down. Here's Bayes' Theorem:

\(P(y|x_1, ..., x_n) = \frac{P(y)P(x_1, ..., x_n|y)}{P(x_1, ..., x_n)}\)

Think of y as a class (in our case we have 2 classes), and x_1, ..., x_n as the data (i.e. the words) used to predict the class. So the left side of the formula is the probability of a particular class given a sequence of words (x1, x2, ...). To turn this into a classifier, we calculate this value for each class (so for \(y=0\) and \(y=1\)) and choose the class with the highest probability. Voilà!

But how do we calculate these probabilities? We somehow need to calculate all those probabilities on the right side of the equation. This is where the naive part comes in.

The naive assumption is something called "conditional independence": given the class y, each word x_i is independent of all the other words. Applied to our scenario, we assume the probability of a word appearing in a given class does NOT depend on any of the other words around it (which is indeed naive, because in language the meaning of a word can change depending on the words that surround it). But we will see how far we can get by being naive. As a formula, the naive assumption looks like this:

\( P(x_i|y, x_1, ..., x_{i-1}, x_{i+1}, ..., x_n) = P(x_i|y)\)

But this is just for one data point, or in our case one word. The numerator on the right side of Bayes' Theorem (the first formula) needs the joint probability of all the words in a message, \(P(x_1, ..., x_n|y)\). Since we have assumed conditional independence, this is just the product of the individual word probabilities:

\( P(y|x_1, ..., x_n) = \frac{P(y)P(x_1|y)P(x_2|y)...P(x_n|y)}{P(x_1, ..., x_n)} \)

This can be written more compactly using the uppercase pi (product) symbol:

\(P(y|x_1, ..., x_n) = \frac{P(y)\prod^{n}_{i=1}P(x_i|y)}{P(x_1, ..., x_n)}\)

So we will calculate this formula for each class (i.e. for y=0 and y=1) and then compare the results to find the one with the highest probability. Since we only care about which one is higher, and since the denominator is the same no matter the value of y, we don't even need to calculate the denominator. In fact, dropping the denominator is common in practice (see Wikipedia or the scikit-learn documentation if you’d like more info):

\(P(y|x_1, ..., x_n) \propto P(y)\prod^{n}_{i=1}P(x_i|y)\)
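Here is a tiny numeric check of why dropping the denominator is safe, using made-up probabilities for a two-word message (not from the dataset). The denominator equals the sum of the numerators over both classes, so dividing by it rescales the scores into probabilities that sum to 1 but can't change which class is largest.

```python
import math

# made-up values for a two-word message (not from the dataset)
prior = {0: 0.5, 1: 0.5}              # P(y)
likelihoods = {0: [0.001, 0.04],      # P(x_1|y=0), P(x_2|y=0)
               1: [0.002, 0.01]}      # P(x_1|y=1), P(x_2|y=1)

# numerator of Bayes' theorem: P(y) * prod_i P(x_i|y)
scores = {y: prior[y] * math.prod(likelihoods[y]) for y in (0, 1)}

# denominator P(x_1, ..., x_n): the same for both classes,
# equal to the sum of the numerators over the classes
evidence = sum(scores.values())
posterior = {y: s / evidence for y, s in scores.items()}

print(posterior)
# the winning class is the same with or without the denominator
print(max(scores, key=scores.get), max(posterior, key=posterior.get))  # 0 0
```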

Ok, that’s it for the scary math. Let’s do this in code now.

We get the probability of a class, P(y), by simply looking at how frequently each class occurs.

```python
# first let's get p(y == 1)
# note: the mean of target is the same as (count of y==1) / (total count)
pos_prob = train_df['target'].mean()
print('prob pos: ', round(pos_prob, 3))

# now let's get p(y == 0)
# similarly, use the mean trick to calculate (count of y==0) / (total count)
neg_prob = (train_df['target'] == 0).mean()
print('prob neg: ', round(neg_prob, 3))

# check that this is a probability distribution (should sum to 1);
# np.isclose avoids an exact floating-point comparison
np.isclose(pos_prob + neg_prob, 1)

# expected output
# prob pos: 0.571
# prob neg: 0.429
# True
```

It makes sense that the positive class is more probable, because there are more positive samples in our dataset: I simply got more examples from ChatGPT for the positive class. However, we might not want to assume that the positive class occurs more often in real life (in fact, the opposite is probably true). We could give both p(y=1) and p(y=0) a value of 0.5 if we wanted to say that they are equally likely to occur. Whatever we do, just remember that this is a toy/fake dataset.

With that disclaimer made, let’s get to the next part of the equation: the probability of a word given a class. We count how many times the word occurs in the given class and divide that count by the total number of words in that class.

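```python
# function to get P(x_i | y): how often word x_i appears in class y
def prob_wgt(word, target):
    if target == 1:
        count = pos_frequencies[word]
        total = sum(pos_frequencies.values())
    elif target == 0:
        count = neg_frequencies[word]
        total = sum(neg_frequencies.values())
    else:
        raise ValueError("target must be 0 or 1")

    # smoothing: give unseen words a tiny pseudo-count so the
    # probability (and later its log) is never exactly 0
    if count == 0:
        count = 1e-5

    return count / total
```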

Let’s test this out with a word for each class.

```python
prob_wgt('morning', 1)
# 0.0007062146892655367

prob_wgt('morning', 0)
# 0.0056568196103079825
```

Since 0.0057 is bigger than 0.0007, we know that, based on our data, the word morning is more likely to come from class 0 than class 1 (i.e. more likely to be in a safe message).

Try experimenting with some other words! (maybe hate, professor, …)
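A note on the smoothing in prob_wgt: replacing a zero count with 1e-5 keeps the log finite, but it acts as a floor rather than a normalized smoothing scheme. A common textbook alternative is additive smoothing (Laplace smoothing when \(\alpha = 1\)), \(P(w|y) = \frac{\text{count}(w, y) + \alpha}{\text{total}_y + \alpha |V|}\), where |V| is the number of distinct words across both classes. Below is a sketch with toy counts; laplace_prob is a hypothetical name, and swapping it in would change the numbers reported in this post.

```python
from collections import Counter

def laplace_prob(word, class_counts, vocab_size, alpha=1.0):
    """Additive-smoothed estimate of P(word | class) (Laplace when alpha=1).

    class_counts: Counter of word counts for one class
    vocab_size:   number of distinct words across all classes
    """
    count = class_counts[word]            # Counter returns 0 for unseen words
    total = sum(class_counts.values())
    return (count + alpha) / (total + alpha * vocab_size)

# toy counts (hypothetical, not the tutorial's data)
pos_counts = Counter("i hate this job i hate mondays".split())
neg_counts = Counter("good morning professor good luck".split())
vocab = set(pos_counts) | set(neg_counts)

print(laplace_prob('hate', pos_counts, len(vocab)))  # 0.1875 (= 3/16)
print(laplace_prob('hate', neg_counts, len(vocab)))  # unseen word, still > 0
```

Unlike the 1e-5 floor, these probabilities sum to 1 over the vocabulary for each class.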

To handle more than one word, let’s write a function that multiplies the probabilities of each word together for each class and returns the class with the highest probability. This function is essentially our classifier!

One thing you might notice is that as we multiply probabilities (i.e. numbers between 0 and 1) together, the result gets smaller and smaller. Floating-point numbers have limited precision, so with enough words the product can underflow to exactly 0. That's why it's common to work with the negative log-likelihood instead.

Taking the log turns the product into a sum, \(-\log\left(P(y)\prod^{n}_{i=1}P(x_i|y)\right) = -\log P(y) - \sum^{n}_{i=1}\log P(x_i|y)\), whose terms stay in a comfortable numeric range. The log of a probability is negative, and the minus sign makes each term positive. Because of that minus sign, the class with the greatest probability has the lowest negative log-likelihood, so we choose the class with the lowest negative log-likelihood.

💡 want: class that has highest prob.

= class with highest log(prob)

= class with LOWEST -log(prob)
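A quick demonstration of the underflow problem, using 400 made-up word probabilities of 0.001 each: the raw product (1e-1200) is far smaller than the smallest positive float64 and rounds to 0.0, while the sum of negative logs stays an ordinary number.

```python
import numpy as np

# 400 hypothetical word probabilities of 0.001 each
probs = np.full(400, 1e-3)

product = np.prod(probs)       # 1e-1200 underflows to 0.0 in float64
nll = -np.sum(np.log(probs))   # the sum of -log(p) stays finite

print(product)  # 0.0
print(nll)      # about 2763.1
```

Once the product is 0.0 for both classes, there is nothing left to compare; the negative log-likelihoods can still be compared.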

Ok so here’s the function:

```python
def predict_target(text):
    nll = {}  # negative log-likelihood of the words for each class
    for cat in [0, 1]:
        # print(f'Class: {cat}')
        nll[cat] = 0
        for w in text.split():
            nll[cat] += -np.log(prob_wgt(w, cat))
            # print(f'word: {w}\t prob: {prob_wgt(w, cat):.5f}\t current nll: {nll[cat]:.2f}')

    # add each class's own prior as -log P(y)
    score_0 = nll[0] - np.log(neg_prob)
    score_1 = nll[1] - np.log(pos_prob)

    # the class with the LOWEST negative log-likelihood wins
    return 0 if score_0 < score_1 else 1
```

Let’s test it out!

```python
predict_target("hate you")

# Expected output (with the print statements uncommented):
# Class: 0
# word: hate   prob: 0.00000   current nll: 18.89
# word: you    prob: 0.03897   current nll: 22.13
# Class: 1
# word: hate   prob: 0.00212   current nll: 6.16
# word: you    prob: 0.01271   current nll: 10.52
# 1 (this is our prediction)
```

Try experimenting with some other words! (ex. Good morning professor)

Congratulations, we have now built our classifier!

Making Predictions

Now let’s go through our training and validation datasets and make a prediction for each one. Since we have our prediction function, we can use the apply function (part of pandas).

```python
train_df['nb_prediction'] = train_df.text.apply(predict_target)
val_df['nb_prediction'] = val_df.text.apply(predict_target)
```

Evaluation

There are MANY ways to evaluate a binary classifier like the one we’ve built (e.g. sensitivity, specificity, precision, recall, …; see the Wikipedia page dedicated to evaluation of binary classifiers).

For this project we will look at the accuracy (the number of correct predictions divided by the total number of predictions) and the confusion matrix, for both training and validation.

Accuracy:

```python
# accuracy = fraction of rows where the prediction matches the target
print('train acc: ', (train_df.target == train_df.nb_prediction).mean().round(4))
print('val acc: ', (val_df.target == val_df.nb_prediction).mean().round(4))

# train acc: 1.0
# val acc: 0.9565
```

So we got 100% accuracy on the training data and about 96% on the validation set. Not bad!! (but remember this is a toy data set)

Now the confusion matrix.

```python
from sklearn.metrics import confusion_matrix

confusion_matrix(train_df.target, train_df.nb_prediction)
# array([[ 76,   0],
#        [  0, 101]])

confusion_matrix(val_df.target, val_df.nb_prediction)
# array([[20,  0],
#        [ 2, 24]])
```

So, in the validation data, we incorrectly labeled two of the data points as negative (we predicted y=0 when it should have been 1). In this scenario, we would NOT have displayed the confirmation message even though the message contained sensitive or potentially harmful content.
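Since a missed harmful message is the costly error here, it can help to turn the validation confusion matrix into per-class numbers. This sketch copies the matrix values reported above and assumes scikit-learn's layout (rows are true labels, columns are predictions, both in the order 0 then 1).

```python
import numpy as np

# validation confusion matrix from above, laid out as [[TN, FP], [FN, TP]]
cm = np.array([[20, 0],
               [2, 24]])

tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()
recall = tp / (tp + fn)       # share of harmful messages that were flagged
precision = tp / (tp + fp)    # share of flagged messages that were harmful

print(f"accuracy={accuracy:.4f} recall={recall:.4f} precision={precision:.4f}")
# accuracy=0.9565 recall=0.9231 precision=1.0000
```

For this use case, recall on the positive class is arguably the number to watch, since every false negative is a harmful message sent without a warning.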

Here are the ones we missed:

```python
val_df[val_df.target != val_df.nb_prediction][['text', 'target', 'nb_prediction']]

#     text                                                 target  nb_prediction
# 12  I hate my in-laws. They drive me crazy every t...         1              0
# 25  I'm going to talk badly about my friend to our...         1              0
```

Summary

In this tutorial we did a lot!

We learned how to:

- read in and clean up the toy dataset I created using ChatGPT,
- split the data and look at word frequencies,
- apply some common NLP methods for cleaning up text:
  - removing non-alphanumeric tokens,
  - removing stopwords,
  - and lemmatizing,
- build a Naive Bayes Classifier from scratch,
- make predictions and evaluate performance.

Other thoughts

We actually did not use the processed data to build our classifier; I leave it as a challenge to recreate the Naive Bayes Classifier using the processed text.
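If you want to try the challenge, here is one possible sketch that wraps the same steps (word counts per class, a log prior, and a sum of negative log-likelihoods with the same 1e-5 floor for unseen words) into a small class with a preprocess hook, so the processed text can be plugged in. NaiveBayesText is a hypothetical name, not code from the project's repo, and its accuracy on this dataset isn't reported here.

```python
import math
from collections import Counter

class NaiveBayesText:
    """Hypothetical compact version of this post's classifier."""

    def __init__(self, preprocess=lambda s: s, unseen_count=1e-5):
        self.preprocess = preprocess        # e.g. process_text
        self.unseen_count = unseen_count    # same floor idea as prob_wgt

    def fit(self, texts, targets):
        self.counts = {0: Counter(), 1: Counter()}
        for text, y in zip(texts, targets):
            self.counts[y].update(self.preprocess(text).split())
        self.totals = {y: sum(c.values()) for y, c in self.counts.items()}
        n = len(targets)
        # log P(y) from class frequencies
        self.log_prior = {y: math.log(sum(1 for t in targets if t == y) / n)
                          for y in (0, 1)}
        return self

    def _nll(self, text, y):
        # -log P(y) - sum_i log P(x_i | y)
        nll = -self.log_prior[y]
        for w in self.preprocess(text).split():
            count = self.counts[y][w] or self.unseen_count
            nll -= math.log(count / self.totals[y])
        return nll

    def predict(self, text):
        # lowest negative log-likelihood wins (ties go to 1, as in predict_target)
        return 0 if self._nll(text, 0) < self._nll(text, 1) else 1

# usage sketch with this post's DataFrames:
# nb = NaiveBayesText(preprocess=process_text).fit(train_df.text, train_df.target)
# val_df['nb_processed_prediction'] = val_df.text.apply(nb.predict)
```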

Although our classifier did well, especially considering that it is “naive,” it can definitely be better. Here’s an example:

```python
predict_target("I'm going to the gym")
# outputs 1, yet this is not really a harmful or sensitive message
```

One potential solution to this problem is to get more data and more realistic data (the above example might not work well because gym is not in our data set). Another solution is to build less “naive” classifiers to see if we can make one that is more robust (this will be covered in the next tutorial).

One last area of improvement would be to record the contact associated with each message in the dataset. If you think about it, the messages you would not want Person A to see are potentially different from the messages you would not want Person B to see. In other words, whether to display a confirmation message (“hey, this message looks like it could be sensitive/harmful”) could depend on the person you are sending it to.

This concludes Part 1 of this tutorial and I hope to see you in the next one!
